LoRA Low-Rank Decomposition



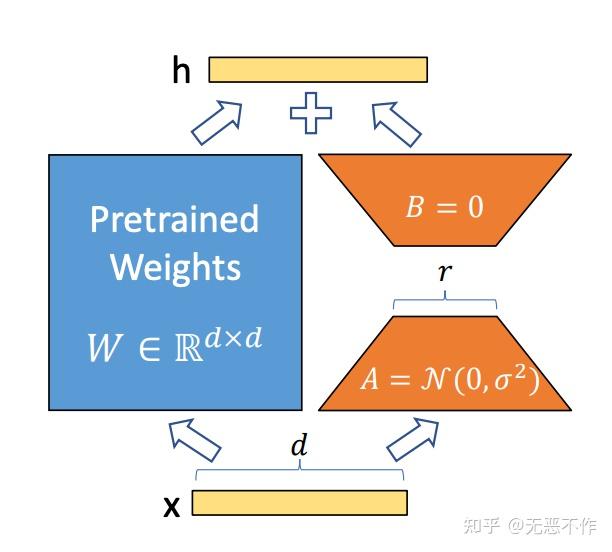

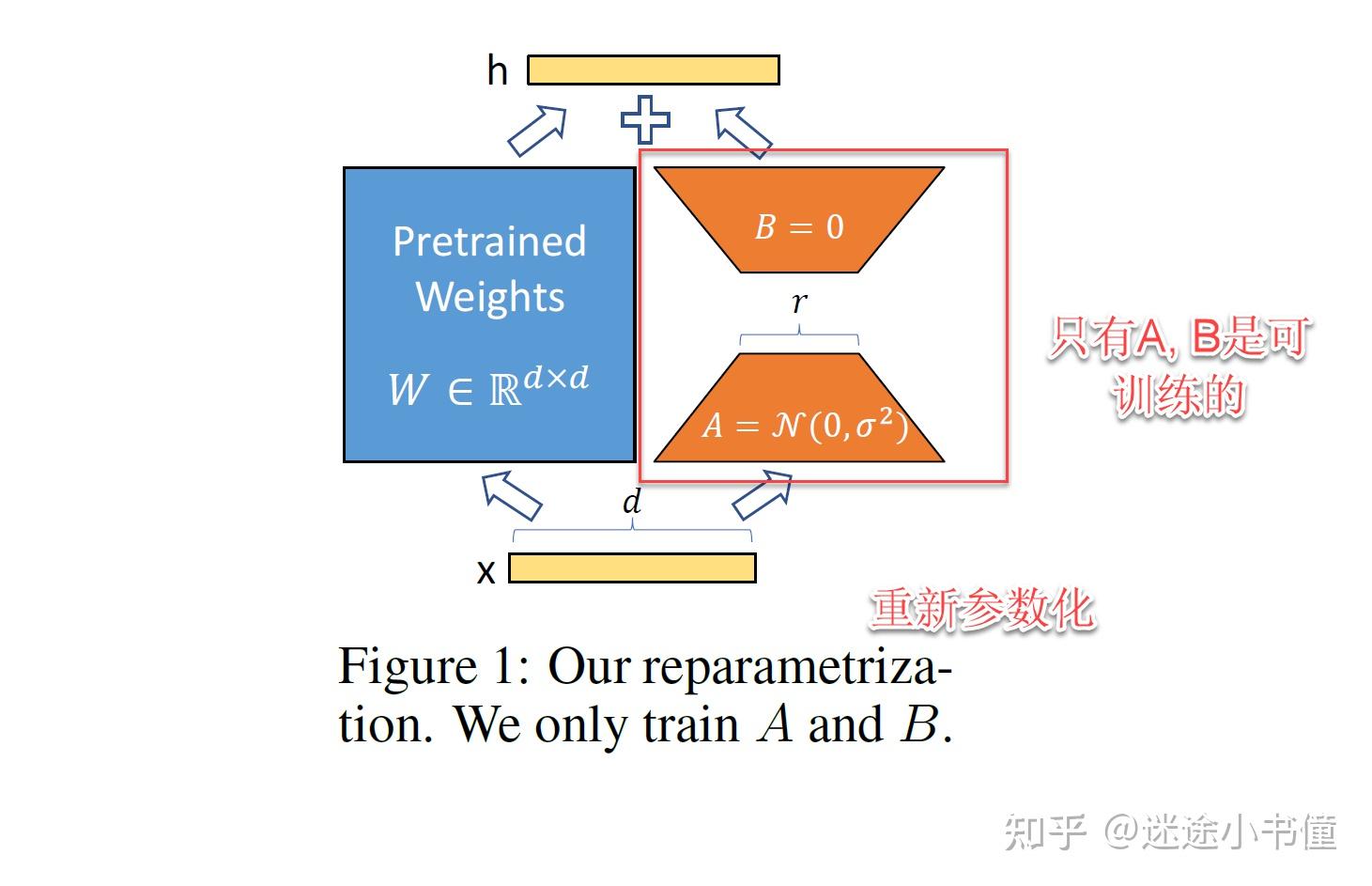

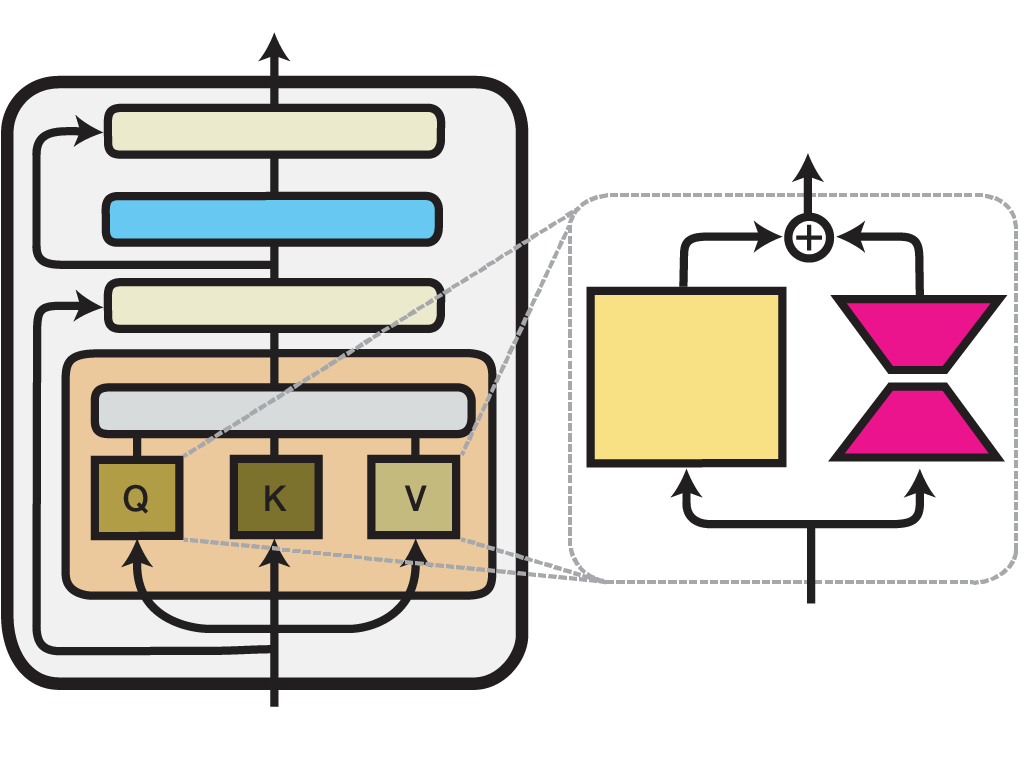

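Practical tips for LoRA fine-tuning: when fine-tuning a large language model (LLM) with LoRA (Low-Rank Adaptation), several practical techniques can improve efficiency and save compute and memory. The core idea of LoRA is to freeze the model's original weights, …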
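The frozen-weight idea can be illustrated with a minimal pure-Python sketch of a LoRA-adapted linear layer. It assumes the formulation from the original LoRA paper: the frozen weight W0 (d x k) gets a parallel low-rank update B A, where A is r x k, B is d x r, and the update is scaled by alpha / r. A starts with small random values and B starts at zero, so the adapted layer initially behaves exactly like the frozen one. The class name `LoRALinear`, the helper `matvec`, and the init standard deviation 0.01 are illustrative assumptions, not a library API.

```python
import random

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

class LoRALinear:
    """Hypothetical layer: frozen weight W0 (d x k) plus a trainable low-rank update B @ A."""

    def __init__(self, W0, r, alpha, seed=0):
        rng = random.Random(seed)
        d, k = len(W0), len(W0[0])
        self.W0 = W0              # frozen pretrained weight, never updated
        self.scale = alpha / r    # the update is scaled by alpha / r
        # A (r x k): small random values (std 0.01 is an assumption).
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        # B (d x r): zeros, so B @ A = 0 and the layer starts equal to W0.
        self.B = [[0.0] * r for _ in range(d)]

    def forward(self, x):
        base = matvec(self.W0, x)                  # W0 x
        delta = matvec(self.B, matvec(self.A, x))  # B (A x); the d x k product is never formed
        return [b + self.scale * u for b, u in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained: r*k + d*r values.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

W0 = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
layer = LoRALinear(W0, r=1, alpha=1.0)
print(layer.forward([1.0, 0.0, -1.0]))   # [-2.0, -2.0], same as W0 x because B = 0
print(layer.trainable_params())          # 5 (the full W0 has 6 entries)
```

The saving is negligible at this toy size but large at realistic ones: for d = k = 4096 and r = 8, the full matrix has 16,777,216 entries, while A and B together have 8 x (4096 + 4096) = 65,536.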

LoRA Low Rank Adaptation Of Large Language Models YouTube

LoRA Low Rank Adaptation Of LLMs Explained YouTube

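LoRA architecture diagram. Background: although large language models (LLMs) have made great progress in adapting to new tasks, they still consume enormous computational resources, and their performance in complex domains is often unsatisfactory. To alleviate this …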
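One consequence of the LoRA architecture change is that the added branch is linear, so after training the update can be folded back into the base weight, W = W0 + (alpha / r) B A, and the deployed layer has the same shape and cost as the original. The pure-Python sketch below checks that merged and unmerged outputs agree on a small hand-picked example; `merge_lora`, `unmerged_forward`, and `matvec` are hypothetical helper names, and the matrices are arbitrary illustrative values.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def merge_lora(W0, A, B, scale):
    """Return W0 + scale * (B @ A) as a new d x k matrix; W0 is not modified."""
    r = len(A)
    return [
        [W0[i][j] + scale * sum(B[i][t] * A[t][j] for t in range(r))
         for j in range(len(W0[0]))]
        for i in range(len(W0))
    ]

def unmerged_forward(W0, A, B, scale, x):
    """Adapter kept as a separate branch: W0 x + scale * B (A x)."""
    base = matvec(W0, x)
    delta = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, delta)]

W0 = [[1.0, 0.0], [0.0, 1.0]]   # d x k with d = k = 2
A = [[1.0, 2.0]]                # r x k with r = 1
B = [[0.5], [-1.0]]             # d x r
scale = 2.0                     # alpha / r, e.g. alpha = 2, r = 1
x = [1.0, 1.0]

W = merge_lora(W0, A, B, scale)
print(W)                                     # [[2.0, 2.0], [-2.0, -3.0]]
print(matvec(W, x))                          # [4.0, -5.0]
print(unmerged_forward(W0, A, B, scale, x))  # [4.0, -5.0]
```

Keeping the branch unmerged lets several adapters share one frozen W0; merging trades that flexibility for a single weight matrix at inference time.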

Gallery for LoRA Low-Rank Decomposition

Low rank Adaption Of Large Language Models Explaining The Key Concepts

LoRA Low Rank Adaptation Of Large Language Models

Insights For Artificial Intelligence All Things AI

Hugging Face LoRA

LoRA Low Rank

Paper Page LQ LoRA Low rank Plus Quantized Matrix Decomposition For

Understanding LoRA Low rank Adaption Of Large Language Models

Using LoRA For Efficient Stable Diffusion Fine Tuning

Adapter Methods AdapterHub Documentation

Understanding LoRA Low Rank Adaptation Gary Gan